OutSystems provides many options when it comes to integrating with external systems, and it literally opens the door to connecting platforms that by all rights, shouldn’t connect. That power of bringing disparate systems together also comes with complications, particularly when you need to undertake heavy post-processing of that same data.

As you receive data, whether from API endpoint, or external database connection, the steps required to post-process and ingest the data can sometimes lead to database deadlocking or other consequences from receiving that data in simultaneous calls. As an example, if you’ve exposed a REST API endpoint to receive updated customer data from a legacy system, you might have to validate the customer exists, and if the legacy system is the Source of Truth, re-run calculations based on the new customer status.

At the same time that these re-calculations are taking place, consider a new customer update coming in to the API endpoint, requiring the same calculations. Or during the first attempt, an end user retriggers the same customer upload — the end result being a database deadlock error as it processes at the same time.

So the problem we’re looking to bypass is how to avoid data synchronicity issues, or database deadlocking? One possible option is to make use of the CommitTransaction action within OutSystems. By including this in your action flows, you force the database to finalise the transaction, releasing the hold on that record, and allowing you to move on. The downside with such a solution is that in the event of an error during the transaction, you will only benefit from a partial database rollback — up until the most recent CommitTransaction or completed action.

With a series of database commits in an action, the chances are you’ll end up with a partially committed transaction and an error. For data integrity, definitely not the best outcome.

The solution that I like to implement is to disconnect the receipt of data from the processing of data.

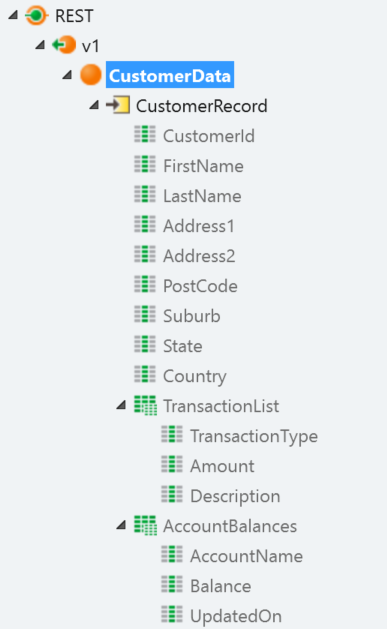

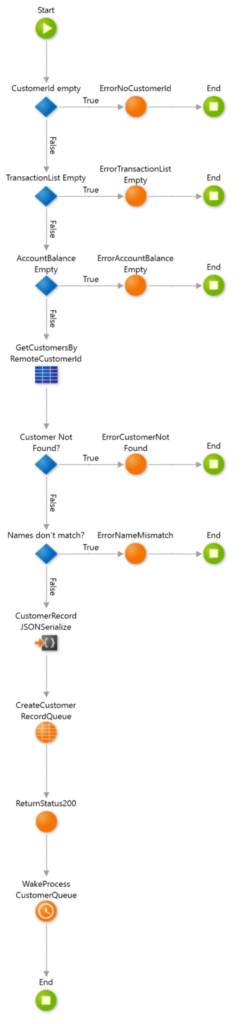

Consider a REST API endpoint that you have exposed, and it can receive a payload consisting of a Customer and their details. It might also contain a list of transactions or account balances.

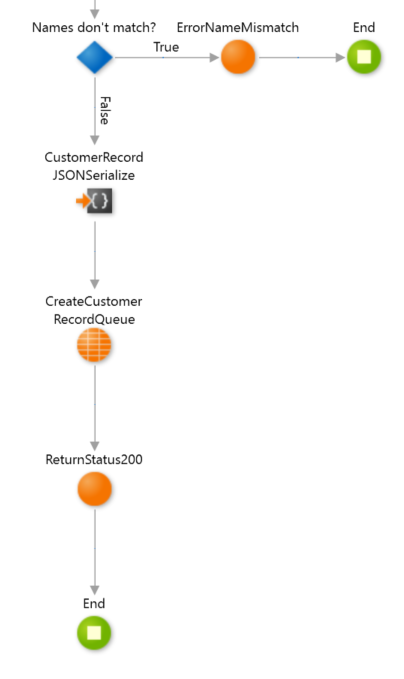

To create the first break in the transmission, we have an action in the API endpoint that first checks if the Customer is valid within the context of our application. We might check to make sure the Customer exists, and if the name matches what we already have. This simple validation is fast, and allows us to return a status code to the caller so they can complete their transaction.

However, before we return the status code, we want to deal with the data that we’re happy with. We could, if we wanted, recreate the contents of the payload in an entity. In the situation above, we would need a Customer entity, and then entities for the Transactions and Account Balances. This quickly becomes unwieldy, and every time the structure changes you need to modify the entities to match.

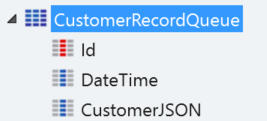

A good option here is to create an entity with a couple of attributes, the most important being a text attribute.

What we place in the API action is a simple JSONSerialize action, and then we write the JSON to the entity. This takes the payload, converts it to a JSON string, and then we store it in the database. The API can then move on and send the status code to the legacy application.

The main concern you’ll have here is the length of the text attribute — you’ll want it to be long enough to ensure you can contain the payload without encountering an error. This is perhaps the weakest element of this solution — particularly as the larger the text element, the more resources required to store and retrieve the record.

We’ve now achieved our first objective, which is to disconnect the receipt of data from the processing. Now we want to process it in an orderly fashion.

Two possible options present themselves — Light BPTs and Timers. With Light BPTs you can send each record to a light business process and they can run concurrently, but in this scenario we’re specifically looking at running the post-processing in a sequential manner. So for now, we’ll avoid Light BPTs.

Timers are a great way to deal with data queues, you can either schedule them to run at specific times, or even trigger them to “wake-up” when you receive the data. What we’ve done in the first step is create our data queue, and now we can process the data.

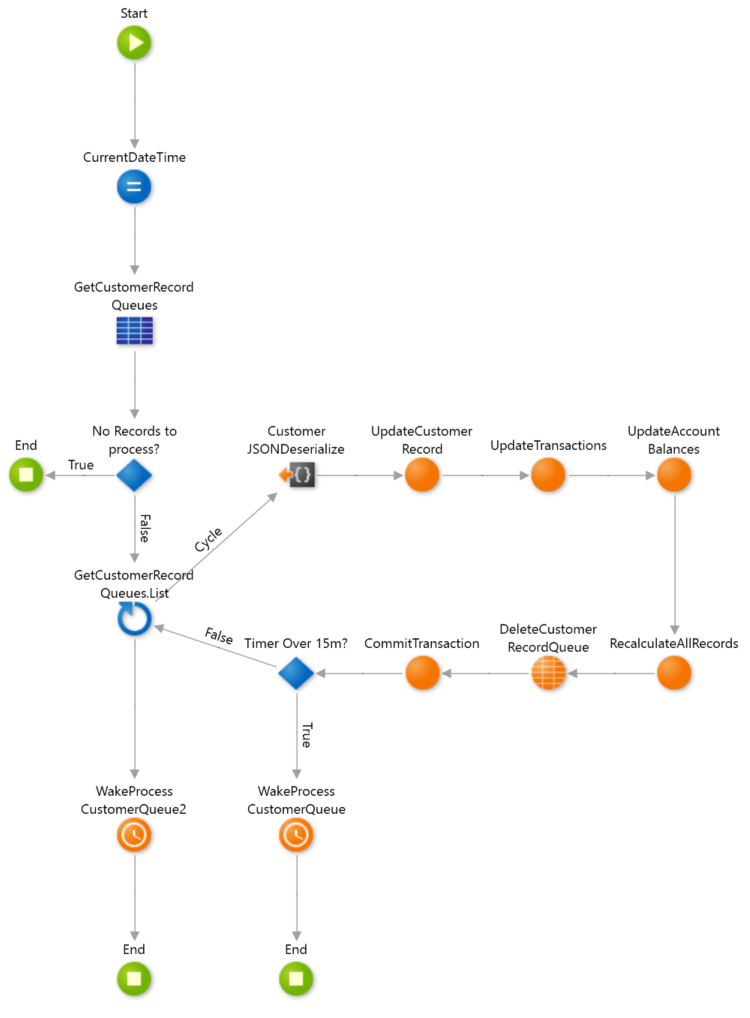

The structure of our timer is going to be pretty standard, and what we’ll do is ensure that we can cater for 1 record, or hundreds of thousands of records. The important step here is ensuring we don’t time-out the timer and cause all the processed records to roll back. For this purpose, we’ll create a local variable with the current time so we can check if we’ve exceeded the time-out.

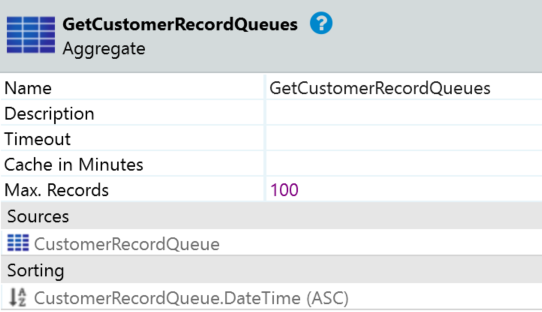

Next, we want to grab the records from our staging entity — it’s best to grab them in chunks rather than the whole result set. And now the post-processing begins!

As we cycle through each record, we firstly need to deserialize it from our JSON string into a structure. We can then pass that structure through our normal processes that had previously caused us problems. We create or update our transactions, and update the account balances, and run all the process-intensive actions on each record.

As we come out of each cycle, if we’re happy that we’ve dealt with that record, we delete it from our queue and commit the transaction. We then need to check our Timer progress against our pre-defined limit (usually a site property like 15 minutes). The default time-out for a Timer is 20 minutes, so that gives us 5 spare minutes to finish the last transaction without causing an error.

If we’re under that time gap, we continue on to the next record. If we’re over, we break out of the loop, call the Timer to wake and end. Lastly, if we’ve finished processing the batch of records, we wake the Timer again so it can process the next batch. The final step is to add a check after we’ve grabbed the batch of records, and if the result is empty, we end the Timer.

We can finally go back to our API action, and after we’ve adding the JSON record to the entity, we can wake the Timer to process the data.

And with that, we’ve turned the asynchronous receipt of data into a disconnected orderly processing of data.

Just a final word on the processing of the data, and if there’s a chance that an error occurs during that processing. I would try to recover the data by calling the source system asking for that one record, and then placing it into the queuing system like all the other records. You could archive the faulty data and remove it from the queue, or notify a data admin. The important step is to remove if from the queue so it doesn’t block processing the remaining records.

Mike Samuel

Senior Developer

Connect with Mike on LinkedIn

This article was originally published on Medium.